15-14 Vol. 1

PROGRAMMING WITH INTEL® AVX-512

ŌĆó

Explicitly-unaligned SIMD load and store instructions accessing 64 bytes or less of data from memory (e.g.,

VMOVUPD, VMOVUPS, VMOVDQU, VMOVQ, VMOVD, etc.). These instructions do not require the memory

address to be aligned on a natural vector-length byte boundary.

ŌĆó

Most arithmetic and data processing instructions encoded using EVEX support memory access semantics.

When these instructions access from memory, there are no alignment restrictions.

Software may see performance penalties when unaligned accesses cross cacheline boundaries or vector-length

naturally-aligned boundaries, so reasonable attempts to align commonly used data sets should continue to be

pursued.

Atomic memory operation in Intel 64 and IA-32 architecture is guaranteed only for a subset of memory operand

sizes and alignment scenarios. The guaranteed atomic operations are described in Section 7.1.1, ŌĆ£Task StructureŌĆØ

of the Intel┬« 64 and IA-32 Architectures Software DeveloperŌĆÖs Manual, Volume 3A. AVX and FMA instructions do

not introduce any new guaranteed atomic memory operations.

AVX-512 instructions may generate an #AC(0) fault on misaligned 4 or 8-byte memory references in Ring-3 when

CR0.AM=1. 16, 32 and 64-byte memory references will not generate an #AC(0) fault. See Table 15-7 for details.

Certain AVX-512 Foundation instructions always require 64-byte alignment (see the complete list of VEX and EVEX

encoded instructions in Table 15-6). These instructions will #GP(0) if not aligned to 64-byte boundaries.

15.8

SIMD FLOATING-POINT EXCEPTIONS

AVX-512 instructions can generate SIMD floating-point exceptions (#XM) if embedded ŌĆ£suppress all exceptionsŌĆØ

(SAE) in EVEX is not set. When SAE is not set, these instructions will respond to exception masks of MXCSR in the

same way as VEX-encoded AVX instructions. When CR4.OSXMMEXCPT=0, any unmasked FP exceptions generate

an Undefined Opcode exception (#UD).

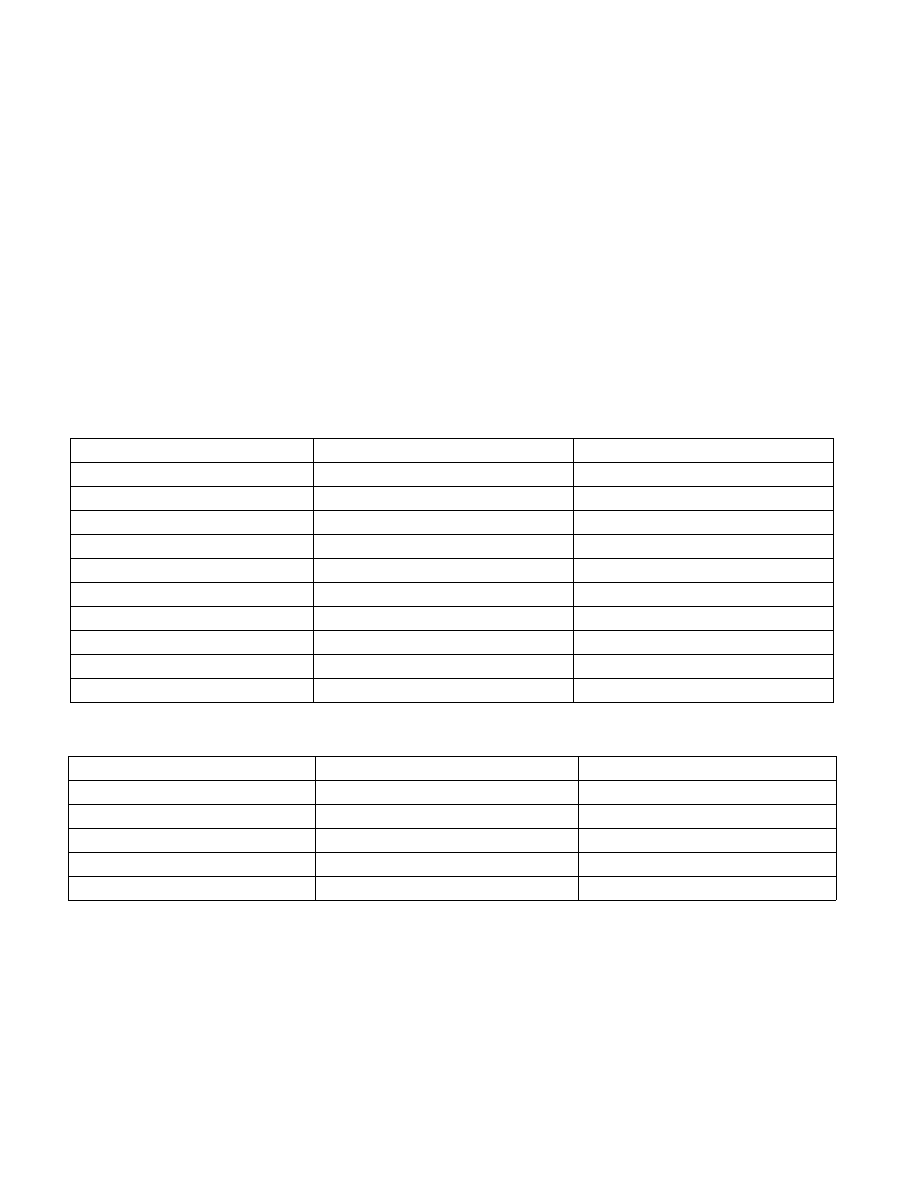

Table 15-6. SIMD Instructions Requiring Explicitly Aligned Memory

Require 16-byte alignment

Require 32-byte alignment

Require 64-byte alignment*

(V)MOVDQA xmm, m128

VMOVDQA ymm, m256

VMOVDQA zmm, m512

(V)MOVDQA m128, xmm

VMOVDQA m256, ymm

VMOVDQA m512, zmm

(V)MOVAPS xmm, m128

VMOVAPS ymm, m256

VMOVAPS zmm, m512

(V)MOVAPS m128, xmm

VMOVAPS m256, ymm

VMOVAPS m512, zmm

(V)MOVAPD xmm, m128

VMOVAPD ymm, m256

VMOVAPD zmm, m512

(V)MOVAPD m128, xmm

VMOVAPD m256, ymm

VMOVAPD m512, zmm

(V)MOVNTDQA xmm, m128

VMOVNTPS m256, ymm

VMOVNTPS m512, zmm

(V)MOVNTPS m128, xmm

VMOVNTPD m256, ymm

VMOVNTPD m512, zmm

(V)MOVNTPD m128, xmm

VMOVNTDQ m256, ymm

VMOVNTDQ m512, zmm

(V)MOVNTDQ m128, xmm

VMOVNTDQA ymm, m256

VMOVNTDQA zmm, m512

Table 15-7. Instructions Not Requiring Explicit Memory Alignment

(V)MOVDQU xmm, m128

VMOVDQU ymm, m256

VMOVDQU zmm, m512

(V)MOVDQU m128, m128

VMOVDQU m256, ymm

VMOVDQU m512, zmm

(V)MOVUPS xmm, m128

VMOVUPS ymm, m256

VMOVUPS zmm, m512

(V)MOVUPS m128, xmm

VMOVUPS m256, ymm

VMOVUPS m512, zmm

(V)MOVUPD xmm, m128

VMOVUPD ymm, m256

VMOVUPD zmm, m512

(V)MOVUPD m128, xmm

VMOVUPD m256, ymm

VMOVUPD m512, zmm